For now, this guide will be for Windows specifically. Later on it will list alternative Unix-style commands (e.g. for WSL, Ubuntu, and macOS).

Let’s take this (overly) simple example:

WebdriverIO 5 with Chai

it('should include 50five in the page title', () => {

browser.url('/')

expect(browser.getTitle()).to.include('50five')

})

WebdriverIO 6 with expect-webdriverio

it('should include 50five in the page title', () => {

browser.url('/')

expect(browser).toHaveTitle('50five', { containing: true })

})

When I run it, the test passes.

Does that mean we’re done?

I consider the shortest possible criteria for a good test to be:

- Is it meaningful?

- Does it pass when it should pass?

- Does it fail when it should fail?

The example test passes. Should it pass? When I manually check the page, the page title indeed contains ‘50five’, so it should pass.

Does it fail when it should fail? To verify this, I can edit the HTML in my local browser instance. I would have to stop test execution, edit the HTML in the browser, and then let the test run on the now incorrect page title.

First, add a pause to the test, placed right after the page under test has loaded:

it('should include 50five in the page title', () => {

browser.url('/')

browser.pause(30000)

expect(browser.getTitle()).to.include('50five')

})

I’ve assumed here that 30 seconds will be enough to make the change, but you can give yourself more time.

Once the page is loaded, we should:

- Open the browser developer tools (F12)

- Ensure we’re on the Elements (Chrome, Edge) or Inspector (Firefox) tab

- Find the title tag (CTRL + F, then type title)

- Remove | 50five.nl

- Select a different element, which will save the current one

- Wait for the test to run

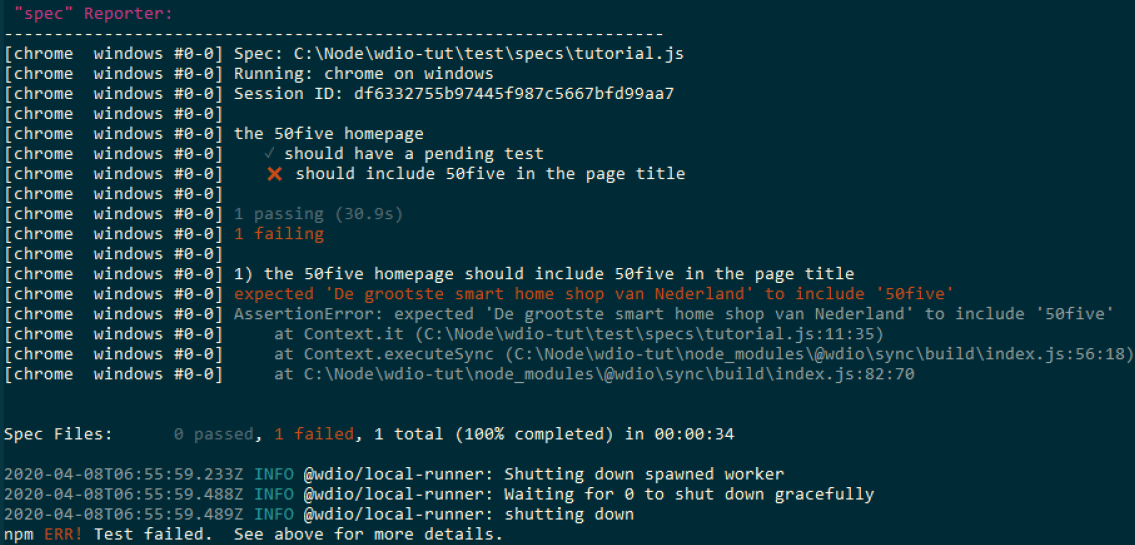
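As an alternative to editing the HTML by hand, the same change could probably be made from within the test itself. This is a sketch, assuming the title ends in "| 50five.nl" as in the steps above; the helper name removeBrandFromTitle is made up, and the browser object is passed in as a parameter so the helper has no hidden dependencies.

```javascript
// Sketch: strip the brand from the page title from within the test.
// `browser` is the WebdriverIO browser object (sync mode assumed).
function removeBrandFromTitle(browser) {
  // browser.execute runs the given function inside the page under test.
  return browser.execute(() => {
    document.title = document.title.replace(/\s*\|\s*50five\.nl/, '')
    return document.title
  })
}
```

Calling removeBrandFromTitle(browser) between browser.url('/') and the assertion would take the place of both the pause and the manual edit.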

Expected: the test fails, and it gives useful feedback (e.g. “found X instead of Y”). Observed:

Sweet. This is what we want to see. You can now remove the pause from the test.

So the test meets the criterion of failing when it should fail.

The final criterion is: is it a meaningful test? How much confidence does it give us when we see it passes? When it fails, does that tell us about a real issue? Does the error message allow us to easily find the root cause? Taken one by one:

Confidence in the code: well, when the test passes it only really tells us that the homepage is still accessible. This is good to know, but this would be more effectively monitored through uptime monitoring tools.

A real issue is identified: not really. This is because the most likely cause of the page title not matching (aside from the site being down) is that a colleague deliberately improved it. At most it is possible that when trying to improve part of the page title, they accidentally removed the “50five” part, but in modern browsers the latter part of the page title is likely obscured in the browser tab anyway.

Makes debugging easy: it tells us what is wrong and where. The test is so simple and specific that it does not hide a different issue. This is good.

Overall, the only thing this test has going for it is that it’s easy to debug. We wouldn’t miss anything of value if we didn’t have this test. Since every test takes time to execute and every non-meaningful passing test could create false confidence in the application as a whole, this test should be removed.

What would be a more meaningful test?

Let’s check whether you can do a search using the header site search. This is commonly used by visitors of the site and takes them towards products and therefore hopefully towards adding them to their cart.

This is how we could do it:

describe('the 50five homepage', () => {

it('should show search results when using site search', () => {

browser.url('/')

const searchBar = $('input#search')

searchBar.waitForDisplayed()

searchBar.setValue('Nest protect')

searchBar.keys("\uE007")

expect($('.search.results')).toBeDisplayed()

})

})

Translation:

- Browser object, go to the baseUrl at its root.

- Find the input field for the site search, and add it to a variable searchBar so we can use it later

- Make sure the input field is visible, so we can interact with it

- Fill the input field with the search query Nest protect

- Send the Enter key to the input field, which submits it

- Check whether the search results grid is displayed, which should be the case on the search results page
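For context, browser.url('/') relies on the baseUrl option in the WebdriverIO configuration file. The fragment below is a sketch only; the URL is an assumption based on the page title, not taken from the actual project configuration.

```javascript
// wdio.conf.js (fragment). The URL is an assumption for illustration.
const config = {
  // Paths passed to browser.url() that start with "/" are resolved against this.
  baseUrl: 'https://www.50five.nl',
}

exports.config = config
```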

With input fields you can also find the submit button and click that. In this example I chose to submit the Enter key, making the assumption that this is the more common way for a user to submit their search query and because we’re not worried about mobile here.
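The click-based alternative could look roughly like this. It is a sketch for WebdriverIO in sync mode, as used in the tests above; the helper name and the submit button selector are hypothetical, and $ is passed in explicitly so the helper is self-contained.

```javascript
// `$` is WebdriverIO's element selector function, passed in as a parameter.
function searchByClickingSubmit($, query) {
  const searchBar = $('input#search')
  searchBar.waitForDisplayed()
  searchBar.setValue(query)
  // Hypothetical selector: adjust it to the real submit button on the page.
  $('button[type="submit"]').click()
}
```

Inside a test this would be called as searchByClickingSubmit($, 'Nest protect'), followed by the same assertion on the search results.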

Run npm t. See it pass when it should pass.

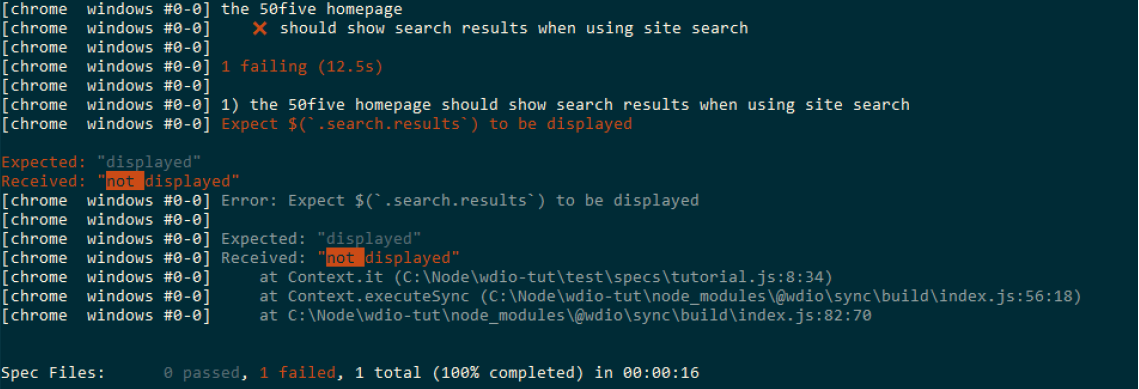

Comment out searchBar.keys("\uE007") (by adding // in front of that line). See it fail when it should fail.

Is it meaningful? Well, if it passes it tells us that critical functionality is working. Great. If it fails it tells us that either the site search is not present, or it cannot be filled, or it cannot be submitted, or it does not lead to search results. This is great to know about! It’s not perfect, since we still have to find out which of these it is, but the test results do tell us. For example:

This shows you can submit a search, but the results are not displayed (or of course it could always be the case that someone or something renamed the element selector on the page).

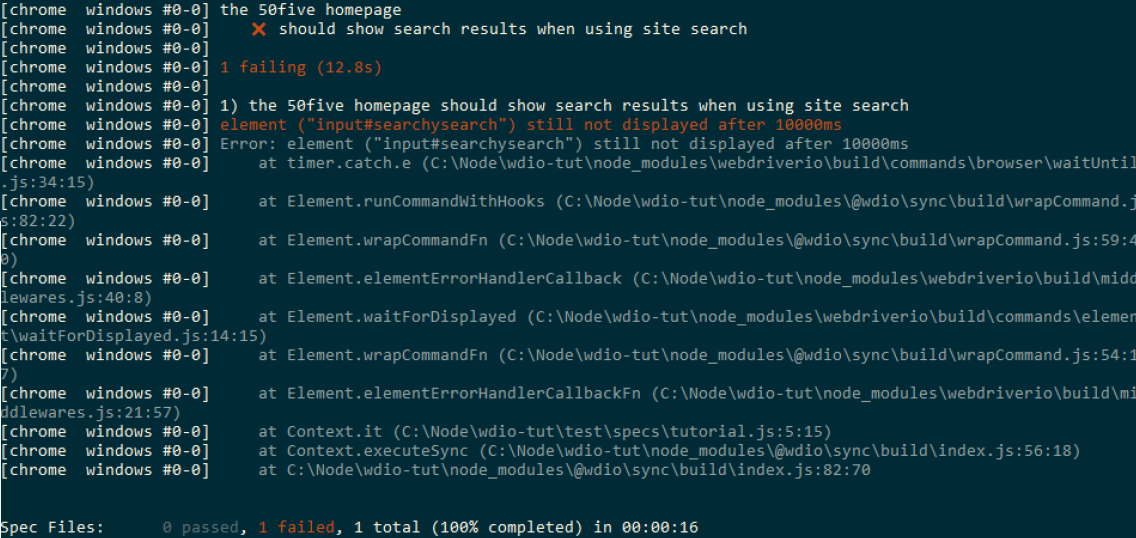

This shows there’s no site search on the page. (To make it easier to simulate this, I just looked for the wrong selector. I could have also just renamed the element in the HTML whilst the test was running.)

In conclusion, we now have a better test, using the criteria provided and by testing our tests!